Mastering Sitemaps and Robots.txt: Boost Your SEO Today

In today’s digital world, it’s more important than ever to ensure that your website is easily accessible and navigable for both users and search engines. One of the key aspects of optimizing your site for search engine visibility is having properly configured sitemap.xml, HTML sitemap, and robots.txt files. These elements play a critical role in how search engines like Google crawl and index your site, which in turn affects how visible your content is to potential visitors.

But what exactly are these files, and why should you care about them? In a nutshell, they’re essential tools for communicating with search engines and telling them how to interact with your site. By providing a roadmap of your site’s structure (sitemaps) and specifying which areas crawlers should stay out of (robots.txt), you help search engines crawl your site more efficiently and make it easier for them to find and understand your most important content. So let’s dive into the importance of these elements and how to make the most of them for optimal SEO performance.

Fundamentals Of Sitemap.xml And HTML Sitemap

To truly grasp the importance of sitemap.xml and HTML sitemaps, it helps to understand their fundamentals. Both act as a roadmap of your website: the XML version for search engines, the HTML version for people. Sitemap.xml is an XML file that lists the URLs you want search engines to find (ideally your canonical, indexable pages), along with optional metadata such as the last modification date and priority. Because this format is machine-readable, crawlers can use it to discover new or updated content on your site quickly. An HTML sitemap, on the other hand, is a human-readable page, usually linked from your website’s footer, that gives visitors an organized overview of your site’s structure.
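To make the format concrete, the following Python sketch parses a small example sitemap.xml with the standard-library ElementTree module and prints each URL with its optional lastmod value. The example.com URLs and dates are placeholders. Every element belongs to the sitemaps.org namespace, which is why the lookups are namespaced.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol; every <url> element lives in it.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# A tiny example sitemap; the example.com URLs and the date are placeholders.
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/blog/first-post</loc>
  </url>
</urlset>"""


def list_sitemap_entries(xml_text):
    """Return (loc, lastmod) pairs; lastmod is None when the tag is absent."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)  # <loc> is required by the protocol
        lastmod = url.findtext("sm:lastmod", namespaces=NS)  # optional metadata
        entries.append((loc.strip(), lastmod))
    return entries


for loc, lastmod in list_sitemap_entries(SITEMAP_XML):
    print(loc, lastmod or "(no lastmod)")
```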

Although both sitemaps describe your site’s structure, they play distinct roles in search engine optimization (SEO). A well-structured sitemap.xml gives crawlers a clear, concise list of your pages, which makes it easier for them to discover and index every page you care about, including pages that are poorly linked from the rest of the site. Listing a page does not guarantee that it will be indexed or rank well, but a page that search engines never find cannot appear in results at all. An HTML sitemap, meanwhile, improves the user experience by making navigation easier, which can keep visitors on your site longer, and its links also give crawlers another path to your content.

The Impact of HTML Sitemap on User Experience and Website Engagement

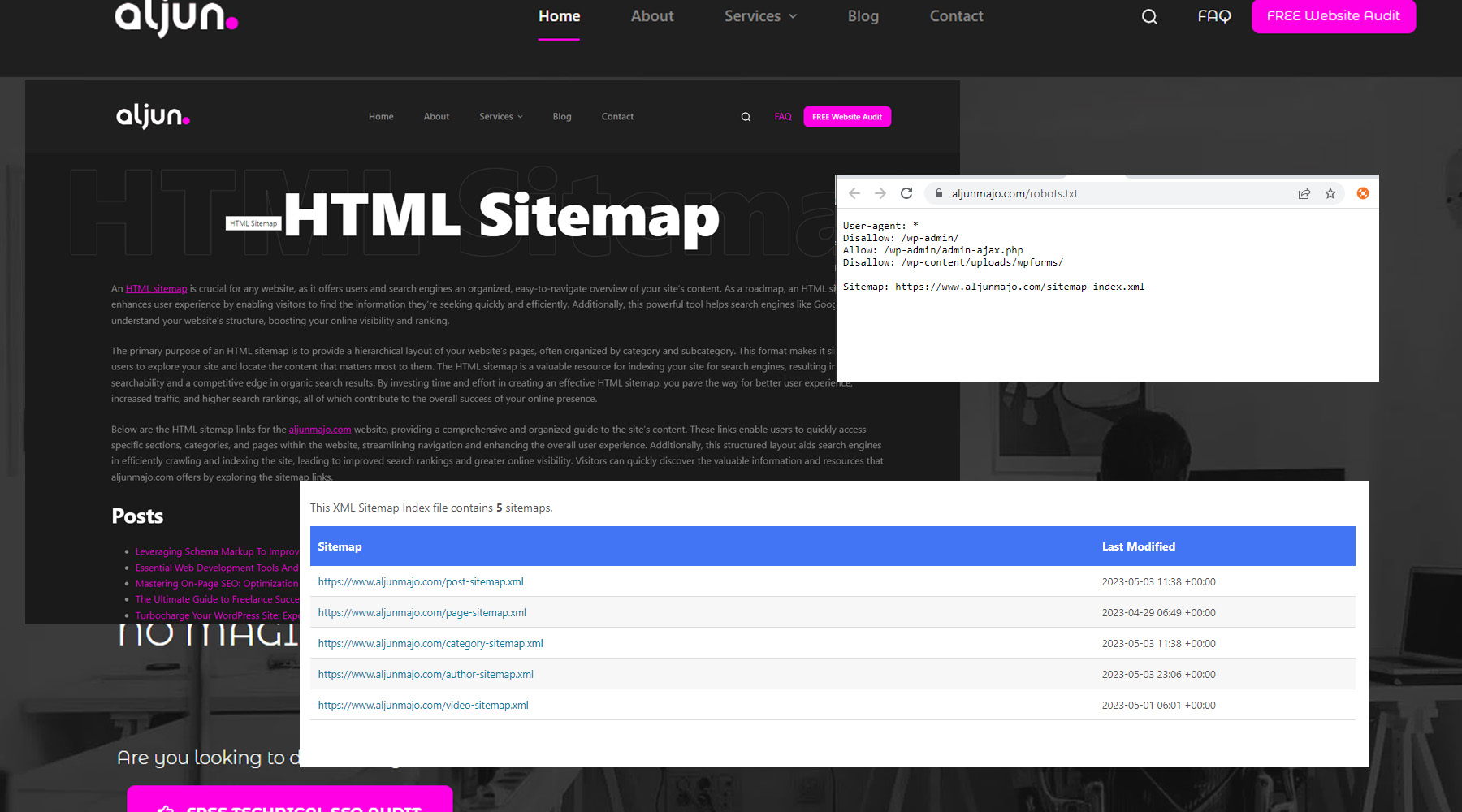

An HTML sitemap (such as the HTML sitemap on this website) is a valuable tool for elevating the user experience on any website. By providing a clear, organized overview of a site’s content, it simplifies navigation and lets visitors quickly find the information they seek. As a result, they’re more likely to remain on the site longer and explore additional pages, which can lead to increased engagement, conversions, and, ultimately, customer satisfaction.

Furthermore, a well-designed HTML sitemap can enhance a website’s accessibility, making it more user-friendly for individuals with disabilities. For instance, screen readers or other assistive technologies can easily navigate through the sitemap to locate specific pages, ensuring that all users can enjoy a seamless browsing experience. This inclusive approach benefits your site’s users and reflects positively on your brand’s reputation.

Effective internal linking is another advantage provided by an HTML sitemap. By connecting related content within your site, you can guide visitors through a logical progression of information, addressing their questions and concerns systematically. This helps users better understand your content and encourages them to explore more of your site, thereby increasing page views and deepening their connection with your brand.
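Under the hood, an HTML sitemap is simply a page of grouped internal links. The Python sketch below renders one from a hypothetical page inventory; the PAGES list and the render_html_sitemap helper are illustrative and not taken from any particular CMS. Wrapping the lists in a labelled nav element with section headings is one reasonable way to keep the page easy to scan for sighted visitors and easy to navigate for screen-reader users.

```python
from collections import defaultdict
from html import escape

# Hypothetical page inventory: (section, page title, URL path).
PAGES = [
    ("Services", "Web Development", "/services/web-development/"),
    ("Services", "Technical SEO", "/services/technical-seo/"),
    ("Blog", "Mastering Sitemaps & Robots.txt", "/blog/sitemaps-robots-txt/"),
]


def render_html_sitemap(pages):
    """Group pages by section and render each group as a heading plus a <ul> of links."""
    sections = defaultdict(list)
    for section, title, path in pages:
        sections[section].append((title, path))

    parts = ['<nav aria-label="Sitemap">']
    for section in sorted(sections):
        parts.append(f"  <h2>{escape(section)}</h2>")
        parts.append("  <ul>")
        for title, path in sorted(sections[section]):
            # escape() protects against &, <, > and quotes in titles and URLs
            parts.append(f'    <li><a href="{escape(path)}">{escape(title)}</a></li>')
        parts.append("  </ul>")
    parts.append("</nav>")
    return "\n".join(parts)


print(render_html_sitemap(PAGES))
```

The output is a plain HTML fragment, so it can be dropped into whatever page template your site already uses.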

An HTML sitemap is a powerful tool for optimizing the user experience on your website. By simplifying navigation, enhancing accessibility, and improving internal linking, your site can provide a seamless and enjoyable browsing experience that can ultimately lead to higher engagement, conversions, and brand loyalty.

Unlocking the Power of Sitemap.xml for Enhanced Website Performance

The significance of a sitemap.xml file lies in its ability to streamline communication with search engines. As a structured blueprint of your website’s pages and content, it helps search engines crawl your site efficiently, which is the first step toward getting your pages indexed and ranked. By giving search engines an up-to-date overview of your site’s structure, you remove a common obstacle to visibility in search results.

Another crucial aspect of sitemap.xml files is their capacity to speed up the discovery of new or updated content. When you add or modify pages on your website, an up-to-date sitemap with accurate lastmod dates tells search engines which URLs have changed the next time they fetch the file. Consequently, your content can be recrawled and indexed sooner than if search engines had to find the changes through links alone, helping you maintain a fresh, relevant online presence.
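Search engines tend to trust lastmod only when it is consistently accurate (Google, for example, documents that it uses the value only when it is verifiably accurate), so it pays to change the date only when a page’s content really changes. Here is a minimal Python sketch of one way to do that, assuming you store a fingerprint and date per URL between runs; the storage format and the refresh_lastmod helper are hypothetical. For simplicity it hashes the whole HTML string, whereas a real implementation would hash only the main content so that rotating widgets or timestamps don’t count as edits.

```python
import hashlib
from datetime import date


def content_fingerprint(html):
    """Hash the page HTML; identical content always yields the same digest."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def refresh_lastmod(previous, pages, today):
    """Return a new {url: (fingerprint, lastmod)} map.

    previous: map saved from the last run (hypothetical storage format).
    pages:    {url: current_html} for every page that belongs in the sitemap;
              URLs missing here are dropped, e.g. deleted pages.
    today:    date stamped on pages that are new or whose content changed.
    """
    updated = {}
    for url, html in pages.items():
        fingerprint = content_fingerprint(html)
        old = previous.get(url)
        if old is not None and old[0] == fingerprint:
            updated[url] = old  # unchanged content keeps its original lastmod
        else:
            updated[url] = (fingerprint, today.isoformat())  # new or edited page
    return updated


# Usage: /b is edited between runs, /a is not.
previous = refresh_lastmod({}, {"/a": "<p>v1</p>", "/b": "<p>v1</p>"}, date(2024, 1, 1))
current = refresh_lastmod(previous, {"/a": "<p>v1</p>", "/b": "<p>v2</p>"}, date(2024, 2, 1))
print(current["/a"][1], current["/b"][1])  # 2024-01-01 2024-02-01
```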

Sitemap.xml files also prove invaluable for large or complex websites. In such cases, a sitemap helps search engines work through intricate site structures and find deep or orphaned pages (pages with few or no internal links pointing to them) that might otherwise be overlooked. The more of your important pages search engines know about, the better your chances of ranking and attracting organic traffic. Keep in mind that a single sitemap file may contain at most 50,000 URLs and be at most 50 MB uncompressed, so very large sites split their URLs across several sitemaps listed in a sitemap index file.
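The splitting step can be sketched in a few lines of Python. The sitemaps.org protocol caps each sitemap file at 50,000 URLs, so this sketch chunks a URL list and renders a sitemap index that points at each child file. The 120,000 product URLs and the sitemap-products-N.xml naming scheme are made up for illustration, and the sketch only checks the URL count, not the 50 MB size limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit from the sitemaps.org protocol


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a URL list into consecutive chunks of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Render a <sitemapindex> document that points at each child sitemap."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        sitemap = ET.SubElement(root, "sitemap")
        ET.SubElement(sitemap, "loc").text = loc
    return ET.tostring(root, encoding="unicode")


# Hypothetical site with 120,000 product URLs and a made-up file-naming scheme.
all_urls = [f"https://www.example.com/product/{n}" for n in range(120_000)]
parts = chunk(all_urls)
child_sitemaps = [
    f"https://www.example.com/sitemap-products-{i + 1}.xml" for i in range(len(parts))
]
index_xml = build_sitemap_index(child_sitemaps)

print(len(parts), [len(p) for p in parts])
print(index_xml)
```

Each chunk would then be written out as its own urlset file, and only the index URL needs to be submitted or listed in robots.txt.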

Creating and Configuring Your Sitemap Files

As you delve deeper into website optimization, crafting and fine-tuning your sitemap files is an essential step not to be overlooked. XML and HTML sitemaps, together with the robots.txt file, are instrumental in guiding search engines through your site’s structure and helping them crawl and index your content efficiently.

The Role Of Robots.txt In Website Optimization

The significance of robots.txt in website optimization is hard to overstate. This small text file, placed at the root of your domain (for example, /robots.txt), tells search engine crawlers which parts of your site they may and may not crawl. By keeping crawlers away from low-value sections such as internal search results or cart pages, you help them spend their time on the content that matters most to your target audience, which supports your website’s visibility on search engine results pages. Note that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it.

A well-crafted robots.txt file helps you make better use of your crawl budget and can keep crawlers out of sections that generate large numbers of near-duplicate URLs, such as filtered or sorted listings. It is not, however, a security measure: the file is publicly readable, compliance is voluntary, and blocked URLs can still be indexed without their content. Protect sensitive areas with authentication, and use a noindex directive on a page crawlers are allowed to fetch when you want to keep that page out of search results. Used alongside sitemap.xml and HTML sitemaps, a correctly configured robots.txt file is an important part of maximizing your website’s potential to attract organic traffic and achieve higher search rankings.

To create and configure your sitemap files, start by compiling a comprehensive list of all the URLs you want search engines to index. Various online tools can facilitate this process, such as the free XML Sitemaps Generator. Once you have compiled the list, create an XML sitemap that follows the standard protocol outlined at sitemaps.org; this helps search engines like Google and Bing discover your site’s content quickly. In addition to the XML sitemap, it is worth creating an HTML version for human visitors who may need help navigating your website. As for the robots.txt file, make sure it accurately specifies which areas of your site search engine bots should crawl and which they should skip. By paying close attention to these details and updating your sitemap files whenever content is added to or removed from your site, you will be well on your way to reaping the rewards of effective website optimization.
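If you prefer to generate the file yourself rather than with an online tool, standard-library Python is enough. This is a minimal sketch that assumes you already have a list of absolute URLs with optional last-modified dates; the example.com pages and the build_sitemap helper are placeholders. It emits only loc and lastmod, since Google, for one, ignores the optional priority and changefreq fields.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a sitemaps.org <urlset> from (absolute_url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > automatically
        if modified is not None:
            # W3C date format, e.g. 2024-03-01
            ET.SubElement(url, "lastmod").text = modified.isoformat()
    return ET.ElementTree(urlset)


# Hypothetical page list; in practice it would come from your CMS, router, or a crawl.
pages = [
    ("https://www.example.com/", date(2024, 3, 1)),
    ("https://www.example.com/services/technical-seo/", date(2024, 2, 12)),
    ("https://www.example.com/contact/", None),
]

tree = build_sitemap(pages)
ET.indent(tree)  # pretty-print the output; requires Python 3.9+
tree.write("sitemap.xml", encoding="utf-8", xml_declaration=True)
```

The resulting sitemap.xml is typically served from the site root and then submitted in Google Search Console and Bing Webmaster Tools.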

Implementing An Effective Robots.txt Strategy

Having established the significance of creating and configuring your sitemap files, it’s now time to explore another equally important aspect of website optimization – implementing an effective robots.txt strategy. Just like sitemap files, robots.txt plays a crucial role in guiding search engines through your website and ensuring optimal crawling and indexing.

A well-crafted robots.txt file acts as a set of ground rules for search engine bots, allowing them to access specific parts of your website while restricting them from others. To create an effective strategy, start by identifying which sections of your site you want crawled and which you want crawlers to skip. Then, use the “Allow” and “Disallow” directives, grouped under a “User-agent” line, to grant or deny access accordingly. Additionally, don’t forget to include the location of your sitemap.xml file in robots.txt using the “Sitemap” directive with an absolute URL; this makes it easier for search engines to find your sitemap. Remember that “Disallow” only stops crawling: to keep a page out of search results, use a noindex directive instead, and never rely on robots.txt to hide sensitive information.
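The following Python sketch writes an example robots.txt and checks it with the standard library’s urllib.robotparser, which is handy for sanity-testing rules before you deploy them. The paths and the sitemap URL are placeholders. Two caveats apply: Python’s parser applies the first matching rule, whereas Google applies the most specific (longest) matching rule, so the narrower Allow line is placed before the broader Disallow to make both interpretations agree here; and Python’s parser does not understand the * and $ path wildcards that Googlebot supports, so treat it as a check for simple prefix rules only.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt; the paths and sitemap URL are placeholders.
# Allow comes before the broader Disallow because Python's parser uses the
# first matching rule, while Google uses the longest matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /admin/public/
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/first-post", "/admin/settings", "/admin/public/help", "/cart/checkout"]:
    url = "https://www.example.com" + path
    print(path, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")

print(parser.site_maps())  # ['https://www.example.com/sitemap.xml'] (Python 3.8+)
```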

Monitoring And Updating Your Sitemap And Robots.txt Files

It’s vital not only to create and implement sitemap.xml, HTML sitemap, and robots.txt files but also to monitor and update them regularly. As your website grows and evolves, these files need to accurately reflect the current structure, content, and crawl preferences for search engines.

To keep your sitemap.xml and HTML sitemap up-to-date, consider using automated tools or plugins to generate these files as you add new pages or modify existing ones. For robots.txt, perform regular audits to ensure that any changes in your site’s architecture or content are reflected in the file’s directives. By staying on top of these updates, you’ll be better equipped to maintain optimal visibility on search engines and enhance user experience on your site.
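One audit worth automating is checking that the two files don’t contradict each other: a URL listed in your sitemap but blocked by robots.txt sends search engines mixed signals, and Google Search Console flags such URLs in its indexing reports. This Python sketch assumes you have already downloaded both files as text; the sample contents and the blocked_sitemap_urls helper are hypothetical, and the same prefix-only limitation of urllib.robotparser applies.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Placeholder copies of the two files; in an audit you would fetch the live versions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
"""

SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/cart/summary</loc></url>
  <url><loc>https://www.example.com/blog/first-post</loc></url>
</urlset>"""


def blocked_sitemap_urls(robots_txt, sitemap_xml, user_agent="Googlebot"):
    """Return sitemap URLs that robots.txt forbids the given crawler to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS)]
    return [loc for loc in locs if not parser.can_fetch(user_agent, loc)]


print(blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML))
# ['https://www.example.com/cart/summary']
```

Any URL this reports should either be removed from the sitemap or unblocked in robots.txt, depending on whether you want it crawled.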

Frequently Asked Questions

Can I Use A Single Sitemap For Multiple Websites Or Subdomains, Or Do I Need To Create Separate Sitemaps For Each?

Rather than using a single sitemap for multiple websites or subdomains, it is generally recommended to create a separate sitemap for each domain or subdomain. Under the sitemaps.org protocol, every URL in a sitemap must use the same protocol and host as the sitemap file itself unless you set up cross-site submission, and search engines treat subdomains as distinct sites with their own content and structure. By creating individual sitemaps, you help search engines accurately index and understand the organization of each site, which supports visibility and search performance. Additionally, separate sitemaps make it easier to manage and update each website independently and to see at a glance which site’s content has changed.
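If one system generates URLs for several hosts, a small grouping step keeps each sitemap on a single origin. This Python sketch buckets URLs by scheme and host using a hypothetical group_urls_by_origin helper; the example subdomains are placeholders. Each bucket would then be written out as its own sitemap and referenced from that host’s robots.txt.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_urls_by_origin(urls):
    """Bucket URLs by scheme and host so each origin gets its own sitemap."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        groups[f"{parts.scheme}://{parts.netloc}"].append(url)
    return dict(groups)


urls = [
    "https://www.example.com/",
    "https://www.example.com/about/",
    "https://blog.example.com/first-post",
    "https://shop.example.com/cart/",
]

for origin, members in group_urls_by_origin(urls).items():
    # Each origin would publish its own file, e.g. https://blog.example.com/sitemap.xml
    print(f"{origin}/sitemap.xml -> {len(members)} URL(s)")
```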

How Do I Prioritize Pages Within My Sitemap For Search Engines To Index And Crawl More Frequently?

You can use the optional “priority” tag in your sitemap.xml file to assign values between 0.0 and 1.0 (the default is 0.5), signaling which pages you consider comparatively more important than others on your website. Remember that this is only a relative hint: Google has stated that it ignores priority (and changefreq) values, and other search engines may interpret them differently or not at all. In practice, accurate lastmod dates, regularly updated content, and strong internal linking to your key pages are more reliable ways to get those pages crawled more frequently.

Are There Any Specific Tools Available To Create And Manage Sitemaps And Robots.txt Files More Efficiently?

Yes, several tools can help you create and manage sitemaps and robots.txt files more efficiently, making it easier for website owners to optimize their site’s visibility in search engines. Popular options include XML-Sitemaps.com and Screaming Frog SEO Spider for generating XML sitemaps, WordPress plugins such as Rank Math SEO for generating sitemaps and editing robots.txt, and Google Search Console for submitting sitemaps and monitoring how Google crawls and indexes your site. Together, these tools help ensure that your website is properly crawled and indexed by search engines.

How Can I Troubleshoot Issues With Sitemaps Or Robots.txt Files If Search Engines Are Not Crawling Or Indexing My Site As Expected?

To troubleshoot sitemaps or robots.txt files when search engines are not crawling or indexing your site as expected, start by checking your sitemap for errors in Google Search Console or Bing Webmaster Tools. Ensure the XML sitemap is submitted to these platforms and is valid XML that follows the sitemaps.org format; your HTML sitemap, by contrast, is an ordinary page that only needs to be linked from your site. Next, verify that your robots.txt file is not blocking search engine bots from the pages you want indexed; the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) shows the version Google fetched and any parsing problems. Additionally, inspect individual URLs with the URL Inspection tool in Google Search Console to identify specific indexing issues. If problems persist, consider seeking help from web development forums or consulting an SEO expert to identify and resolve the issue.
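Alongside the reports in Search Console, a quick local script can catch common sitemap mistakes before you resubmit. This Python sketch, built around a hypothetical check_sitemap helper, flags malformed XML, a missing or wrong root namespace, relative URLs, URLs on a different host, and files over the 50,000-URL limit. It is not a full schema validator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def check_sitemap(xml_text, expected_host):
    """Return human-readable problems found in a sitemap (an empty list means none found)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        return [f"unexpected root element {root.tag!r}; expected <urlset> in the sitemaps.org namespace"]

    problems = []
    locs = root.findall(f"{{{SITEMAP_NS}}}url/{{{SITEMAP_NS}}}loc")
    if len(locs) > 50_000:
        problems.append(f"{len(locs)} URLs exceeds the 50,000-per-file limit")
    for el in locs:
        loc = (el.text or "").strip()
        parts = urlsplit(loc)
        if parts.scheme not in ("http", "https"):
            problems.append(f"not an absolute http(s) URL: {loc!r}")
        elif parts.netloc != expected_host:
            problems.append(f"URL on a different host: {loc}")
    return problems


SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>/relative/page</loc></url>
  <url><loc>https://other.example.org/page</loc></url>
</urlset>"""

for problem in check_sitemap(SAMPLE, "www.example.com"):
    print(problem)
```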

What Are Some Best Practices To Ensure My Sitemap And Robots.txt Files Remain Up-To-Date When My Website Undergoes Frequent Content Updates Or Structural Changes?

To ensure your sitemap and robots.txt files remain up to date when your website undergoes frequent content updates or structural changes, it’s crucial to automate the process so these files are updated as new pages are added or old ones are removed. Use a sitemap generator or CMS plugin that automatically creates and updates your XML and HTML sitemaps. Keep your robots.txt file well organized with clear directives, including the location of your sitemap.xml file via the “Sitemap:” directive. Regularly audit and test these files for errors or inconsistencies, and monitor search engines’ crawl and indexing reports so you can catch potential issues early. By following these best practices, you can maintain an accurate representation of your site for search engines and improve overall indexing performance.
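One way to automate robots.txt is to generate it from configuration kept in version control, so the rules and the Sitemap line are rebuilt on every deploy. This Python sketch assumes a hypothetical ROBOTS_CONFIG structure and render_robots_txt helper. It lists Allow lines before Disallow lines within each group, which happens to make first-match and longest-match parsers agree for these example rules, though not for every possible rule set.

```python
# Hypothetical site configuration; in a real pipeline this might live in a settings file.
ROBOTS_CONFIG = {
    "groups": [
        {"user_agents": ["*"], "allow": ["/admin/public/"], "disallow": ["/admin/", "/cart/"]},
    ],
    "sitemaps": ["https://www.example.com/sitemap.xml"],
}


def render_robots_txt(config):
    """Render robots.txt text: one block per user-agent group, then Sitemap lines."""
    lines = []
    for group in config["groups"]:
        lines += [f"User-agent: {agent}" for agent in group["user_agents"]]
        lines += [f"Allow: {path}" for path in group.get("allow", [])]
        lines += [f"Disallow: {path}" for path in group.get("disallow", [])]
        lines.append("")  # a blank line separates groups
    lines += [f"Sitemap: {url}" for url in config["sitemaps"]]  # absolute URLs
    return "\n".join(lines) + "\n"


print(render_robots_txt(ROBOTS_CONFIG), end="")
```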

Conclusion

In essence, diligently maintaining your sitemap and robots.txt files is critical for ensuring search engines can effectively crawl and index your website. To sustain an optimized online presence, it is imperative to prioritize your pages, create separate sitemaps for various domains, and utilize the tools available to you.

If you encounter any issues, don’t hesitate to troubleshoot the problem and implement the necessary adjustments. Regularly updating these files is vital for maintaining a robust online presence and achieving higher search engine rankings. However, if you require expert assistance in this area, you may be interested in engaging the services of a professional Freelance Web Developer with Technical SEO expertise, like me.

My extensive experience and knowledge in web development and Technical SEO can provide the support and guidance you need to navigate the complex landscape of search engine optimization. By partnering with me, you can rest assured that your website will be optimized to its full potential, enabling you to stand out in today’s competitive digital world.

In conclusion, mastering the intricacies of sitemaps and robots.txt files is integral to enhancing your website’s search engine performance. By implementing these strategies and collaborating with a seasoned professional, you can elevate your online presence and achieve the desired results. Don’t hesitate to reach out if you are looking for a Freelance Web Developer with Technical SEO expertise to help boost your website’s SEO and unlock its full potential.